We released BlazeGPU a couple of years ago, allowing the full power of the Blaze virtual screening system to be used on a few consumer graphics cards rather than a full-scale Linux cluster. Since then, graphics cards and CPUs have only got faster, so we decided that it was time to update our benchmarks and see how well all of the new hardware performs.

For these benchmarks we took a random subset of 4,000 molecules from our in-house Blaze data set and searched with a medium-sized query molecule. The molecules in the data set average 80 conformers each. We’ve run with three different search conditions: the full slow-but-accurate simplex algorithm, the standard clique algorithm and the new fastclique algorithm. All of these were run with 50% fields and 50% shape.

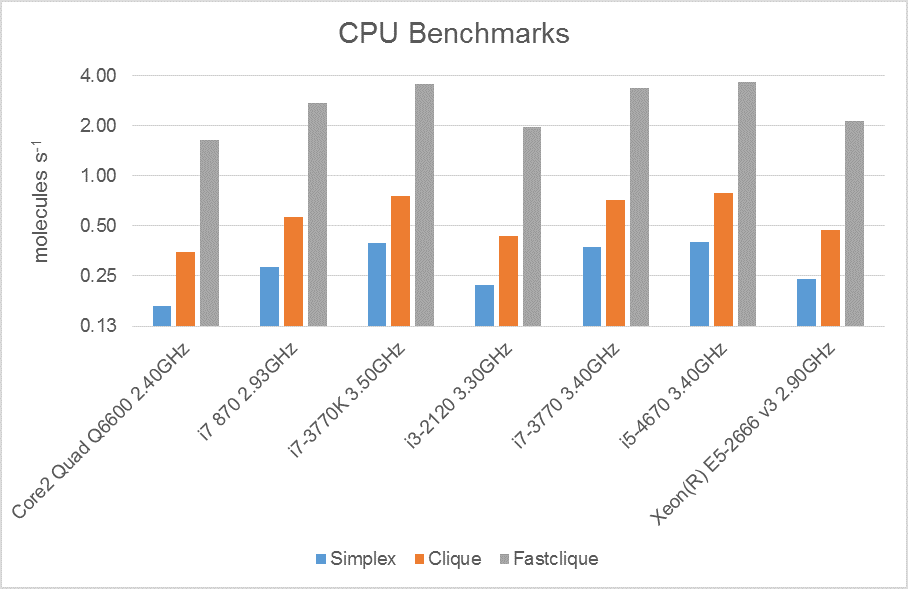

Firstly, the CPU benchmarks. All of these are single-core performance, but with all cores loaded so that we’re not benefitting from Intel Turbo Boost. In most cases Blaze will be saturating all cores, so this is representative of real-world performance. Note that the vertical axis is on a log scale.

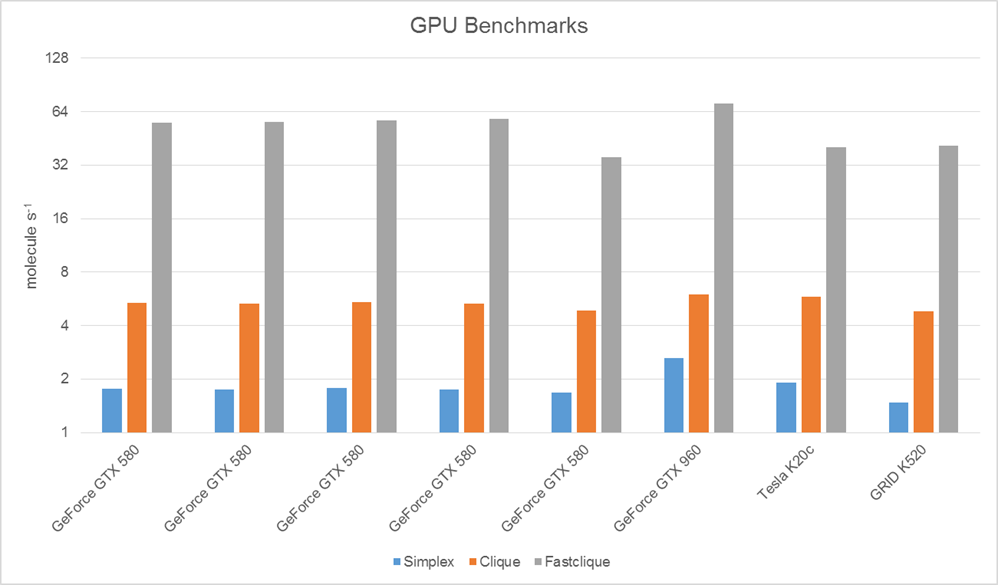

Moving on to the GPUs, we’ve tested the throughput on a range of systems. We started with several GTX580s on different motherboards and processors. As you would expect, performance is governed by the GPU for the most part. The exception is the fifth test system, which is noticeably slower than the others. That card is sitting in a much older chassis with an older motherboard, and hence is probably suffering from a lack of backplane bandwidth to the GPU.

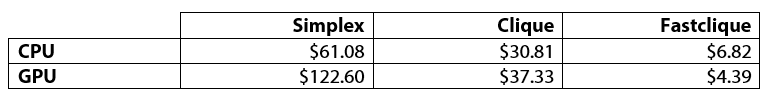

Converting the throughput shown above, we can look at the cost of screening on the Amazon cluster with Blaze. The raw cost to screen a million molecules is shown in the table. Note that the actual costs will be somewhat higher, due to job overheads and data transfer costs.
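The conversion from throughput to raw cost is simple arithmetic, and a minimal sketch of it follows. The function name `cost_per_million`, the throughput figure and the hourly price are illustrative placeholders, not values measured in these benchmarks or actual Amazon prices.

```python
def cost_per_million(molecules_per_second: float, price_per_hour: float) -> float:
    """Raw compute cost of screening 1,000,000 molecules.

    The result is in the same currency as price_per_hour. Job overheads
    and data transfer costs are not included.
    """
    if molecules_per_second <= 0:
        raise ValueError("throughput must be positive")
    seconds = 1_000_000 / molecules_per_second
    hours = seconds / 3600.0
    return hours * price_per_hour


# Hypothetical inputs: 50 molecules/s on an instance billed at $2.00 per hour.
example_cost = cost_per_million(molecules_per_second=50.0, price_per_hour=2.00)
print(f"${example_cost:.2f} per million molecules")
```

Because the cost is inversely proportional to throughput, doubling the screening rate at a fixed hourly price halves the cost per million molecules.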

As would be expected, new hardware is significantly faster at running Blaze than old stock. However, the speed increases are much lower than they have been in the past, and CPUs that are well past their best still perform adequately. On the GPU side, Blaze performs particularly well on commodity graphics cards, leaving few reasons for us to invest in dedicated GPU co-processing cards.

The cost of running a million-molecule virtual screen on the Amazon cloud has never been lower. If tiered processing is used, as is the default for Blaze, then these screens can be performed for a very low cost indeed – less than $15 per million molecules for the processing costs.
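To illustrate why tiering reduces the cost, the sketch below assumes that tiered processing means running the fastest algorithm on the whole library and passing a shrinking top fraction of molecules on to the slower, more accurate algorithms. The tier fractions, throughputs and hourly price are hypothetical values chosen for the example, not Blaze defaults or benchmark results.

```python
def tiered_cost_per_million(tiers, price_per_hour):
    """Raw compute cost of a tiered screen of 1,000,000 molecules.

    tiers: list of (fraction_of_library, molecules_per_second) pairs,
           one per stage, where fraction_of_library is the share of the
           original library that reaches that stage.
    """
    total_seconds = 0.0
    for fraction, rate in tiers:
        if rate <= 0:
            raise ValueError("throughput must be positive")
        total_seconds += 1_000_000 * fraction / rate
    return total_seconds / 3600.0 * price_per_hour


# Hypothetical tiers (assumed, not measured):
#   fast stage on everything, medium stage on the top 10%,
#   slow-but-accurate stage on the top 1%.
tiers = [(1.0, 200.0), (0.10, 50.0), (0.01, 5.0)]
slow_only = [(1.0, 5.0)]

print(f"tiered:    ${tiered_cost_per_million(tiers, 2.00):.2f}")
print(f"slow only: ${tiered_cost_per_million(slow_only, 2.00):.2f}")
```

With these made-up numbers the slowest stage only ever sees 1% of the library, so its low throughput contributes little to the total.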

Contact us for a free evaluation to try Blaze on your own cluster, or Blaze Cloud.